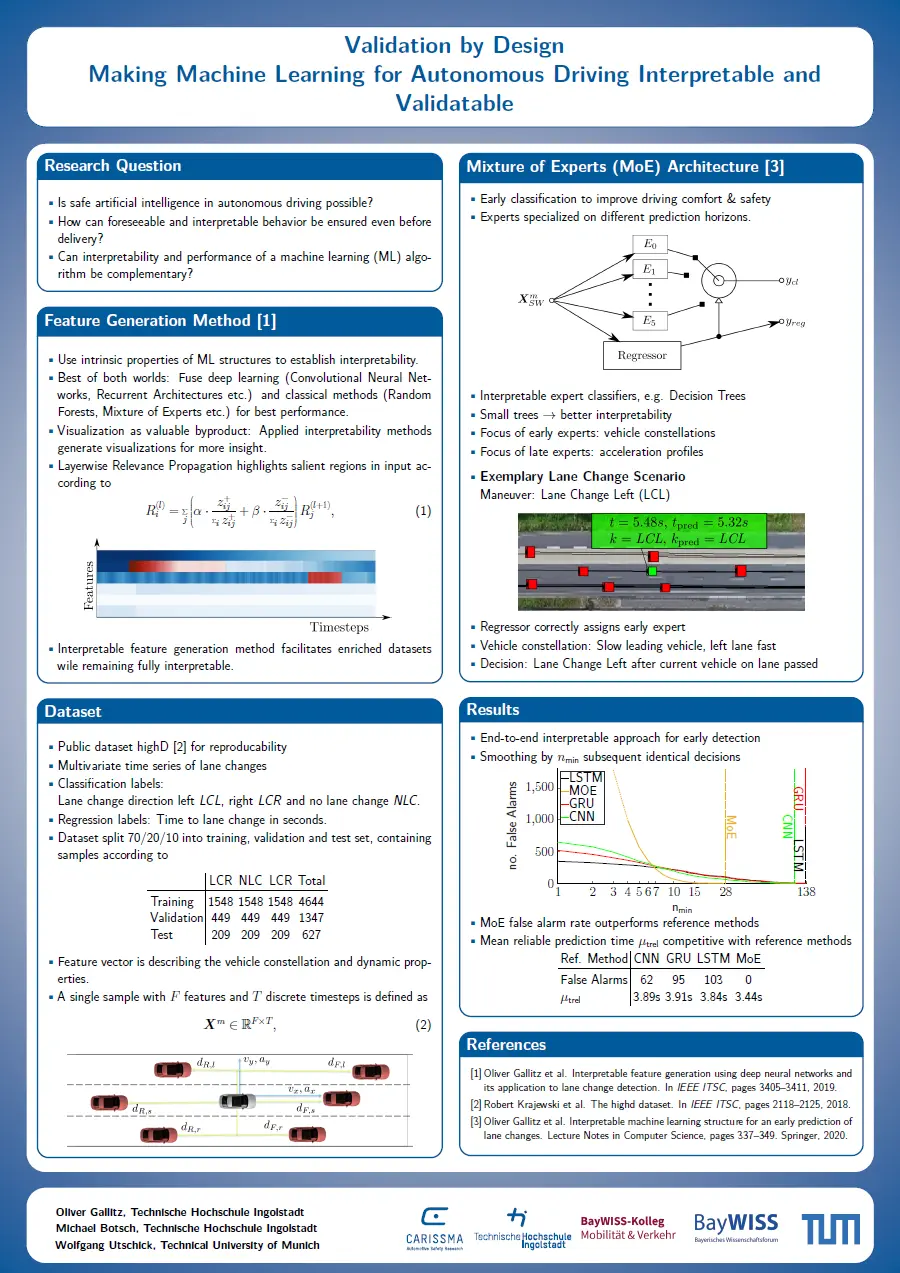

The introduction of automated driving goes hand in hand with a drastic increase in complexity on multiple levels. One of the main reasons is the need to keep track not only of the vehicle's own state, but also of several surrounding objects, some of them unknown. To tackle this complexity, algorithms based on Machine Learning (ML) are applied. ML is increasingly used for perception, situation awareness and trajectory planning, as all of these tasks need to process very large amounts of data.

Most ML methods, such as deep neural networks, ensemble methods or reinforcement learning, are considered black-box algorithms, because their underlying computational models are derived from the provided training data rather than from real-world physical rules. This makes it hard to interpret their results and to validate the software functions in accordance with standards such as ISO 26262.

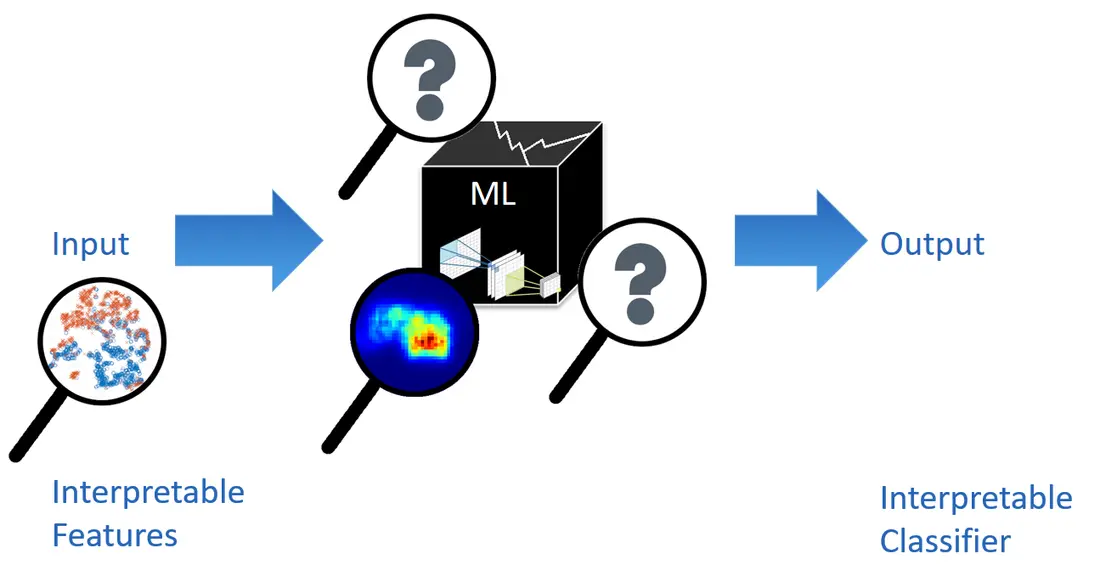

A common approach to generating interpretable and validatable ML-based software functions is to reduce complexity by limiting the model to shallow architectures. In this project, we aim to develop an approach to validating safety-critical functions that can also be applied to deep and complex ML algorithms, by introducing constraints as early as the design phase of the algorithms. For example, deep learning can be used to identify relevant, interpretable features, which can subsequently be used to build validatable classifiers.
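The idea of a validatable classifier built on interpretable features can be illustrated with a minimal sketch. It is not the project's actual method: the feature names (`ttc_s`, `lateral_offset_m`), the sample values and the safety requirement are all hypothetical, and a one-rule decision stump stands in for whatever shallow classifier would be used in practice. The features are assumed to have already been extracted, for example by a deep network upstream.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stump:
    """One-rule classifier: critical (1) if the feature is below the threshold."""
    feature: str
    threshold: float

    def predict(self, sample):
        return 1 if sample[self.feature] < self.threshold else 0

    def rule(self):
        # Human-readable form of the decision rule, suitable for review
        return f"critical if {self.feature} < {self.threshold:g}"


def fit_stump(samples, labels):
    """Pick the (feature, threshold) pair with the fewest training errors."""
    best = None
    for feature in sorted(samples[0]):
        values = sorted({s[feature] for s in samples})
        # Candidate thresholds are midpoints between neighbouring values
        for lo, hi in zip(values, values[1:]):
            candidate = Stump(feature, (lo + hi) / 2)
            errors = sum(candidate.predict(s) != y
                         for s, y in zip(samples, labels))
            if best is None or errors < best[0]:
                best = (errors, candidate)
    return best[1]


def violations(stump, grid, must_be_critical):
    """Return every grid point where a required 'critical' output is missed."""
    return [s for s in grid if must_be_critical(s) and stump.predict(s) != 1]


# Hypothetical interpretable features (assumed output of a feature extractor)
samples = [
    {"ttc_s": 0.5, "lateral_offset_m": 0.2},
    {"ttc_s": 1.0, "lateral_offset_m": 1.5},
    {"ttc_s": 1.5, "lateral_offset_m": 0.1},
    {"ttc_s": 3.0, "lateral_offset_m": 0.3},
    {"ttc_s": 4.0, "lateral_offset_m": 1.0},
    {"ttc_s": 6.0, "lateral_offset_m": 0.4},
]
labels = [1, 1, 1, 0, 0, 0]  # 1 = critical situation, 0 = uncritical

stump = fit_stump(samples, labels)
print(stump.rule())

# Exhaustive check over a discretised feature domain (assumed ranges)
grid = [{"ttc_s": i / 10, "lateral_offset_m": j / 2}
        for i in range(0, 101) for j in range(0, 5)]

# Assumed requirement: a time-to-collision of 2 s or less must be critical
missed = violations(stump, grid, lambda s: s["ttc_s"] <= 2.0)
print(f"{len(missed)} violations on {len(grid)} grid points")
```

Because the classifier depends on only a few bounded, physically meaningful features, its rule can be read directly and checked against a requirement over the whole discretised input domain. Such an exhaustive check is not feasible on raw sensor data fed into a deep network.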
